Designing Finley

Company: Jiffy.AI, Palo Alto, California

Role: Strategy & research, interface design, prompt engineering

THE SOCIETAL PROBLEM

Why it's worth tackling one of the most challenging conversations an LLM can have

Financial advice conversations are highly complex and heavily regulated. Many have argued it is a step too far for LLMs.

However… we have a looming retirement crisis and a huge financial advice gap.

So, if there is any way technology can be used to increase access to personal financial advice, we should harness it.

OUTCOME

After a year of intensive research, we have Finley.

A regulator-ready AI financial advisor that keeps the human advisor 'in-the-loop'

Released to alpha users and soon to be adopted by an advisory firm in California

Check out the whitepaper for an in-depth write-up of our exploration into LLM orchestration, prompt engineering, voice models and multi-modal conversations.

Internal video made during development

THE PROCESS

A deeper dive

Three main problems

HUMAN

Will the user feel comfortable having a conversation in this way?

TECHNICAL

Can an LLM deal with the complexity of financial advice conversations?

INSTITUTIONAL

Can this be delivered within regulatory requirements?

What makes a good financial advisor?

Professional standards

I drew on established principles from professional bodies such as the CFP Board and translated those requirements into expectations for system behaviour: Communication, Competence and Integrity [see whitepaper for further breakdown]

These standards were used as design principles. They shaped how Finley should (and shouldn’t) behave, and where human oversight was required.

Design decisions were assessed not only for usability or feasibility, but for whether a licensed advisor could confidently stand behind them.

User needs

Clients using Finley are not financial experts. They need to be able to speak naturally about their money, ask basic questions, see things on screen, explore scenarios, and change direction without feeling rushed or exposed, just as they would with a human financial advisor.

I studied the makeup of a good voice conversation (listening, intonation, pacing), benchmarked existing voice tools and interviewed potential users.

This led to a set of desired experiences, including human-sounding voices, multi-modal support, advisor-in-the-loop oversight and memory management [full breakdown in whitepaper]

This was not a straightforward translation of principles into a built experience. Many of the behaviours we were aiming for were difficult to achieve reliably at the outset.

Some only became possible as voice models, orchestration frameworks, and latency improved.

The conversation design therefore evolved alongside the technology, with approaches tested, adjusted, and sometimes abandoned as constraints became clearer, both technically and experientially.

Alongside the conversational experience, I developed a lightweight UI design system to bring consistency to a product in flux. This included repeatable patterns for conversation states, graphs and widgets, ensuring the interface remained coherent even as capabilities changed.

We used Retool to support rapid prompt iteration, enabling design and engineering to test assumptions and refine interactions as technical constraints shifted.

SOLUTIONS DEVELOPED

To help users feel comfortable interacting with an LLM:

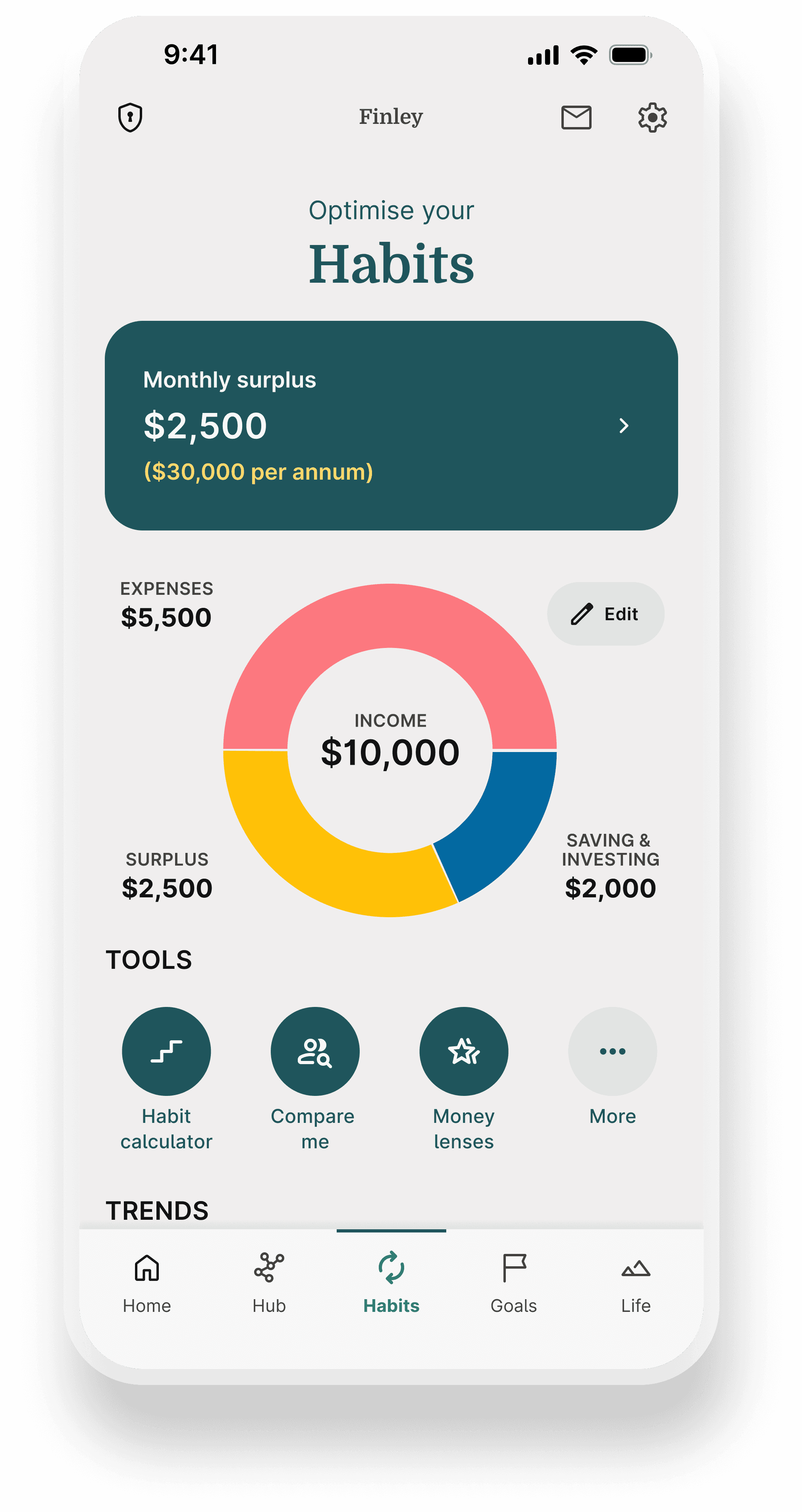

An intuitive interface

The interface mirrors being on a Zoom call with a human advisor: you can see him (or his avatar) on screen, he shares visuals, and he helps you understand trade-offs.

Natural voice and flowing conversation

We evaluated several text-to-speech providers, testing each for natural pacing, ease of integration with the AI’s text output, latency, and support for a custom voice. The standout choice was ElevenLabs' eleven_turbo_v2_5 model.

We introduced a pre-processing step (text cleanup, numerical formatting, prompt tuning and optimised chunking) to improve the speech output and ensure complex financial information was read out correctly.
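To make the text-side part of that step concrete, here is a minimal Python sketch. Everything in it is a hypothetical illustration rather than Finley's production pipeline: the function names (`clean_text`, `format_numbers`, `chunk_for_tts`, `prepare_for_speech`), the regex rules and the 200-character chunk limit are assumptions, and prompt tuning is out of scope.

```python
import re

# Hypothetical TTS pre-processing pass: names, rules and limits are illustrative
# assumptions, not Finley's production pipeline.

_MARKDOWN = re.compile(r"[*_#`]+")  # formatting symbols a voice should never read aloud
_CURRENCY = re.compile(r"\$(\d+(?:,\d{3})*(?:\.\d+)?)")  # e.g. $1,250 or $1,250.50
_PERCENT = re.compile(r"(\d+(?:\.\d+)?)%")  # e.g. 4.5%
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")  # whitespace that follows . ! or ?


def clean_text(text: str) -> str:
    """Remove markdown symbols and collapse runs of whitespace."""
    text = _MARKDOWN.sub("", text)
    return re.sub(r"\s+", " ", text).strip()


def format_numbers(text: str) -> str:
    """Spell out currency and percentage symbols so the voice reads them consistently."""
    text = _CURRENCY.sub(lambda m: f"{m.group(1)} dollars", text)
    return _PERCENT.sub(lambda m: f"{m.group(1)} percent", text)


def chunk_for_tts(text: str, max_chars: int = 200) -> list[str]:
    """Group whole sentences into chunks of at most max_chars.

    Sentences are never split, so a single sentence longer than max_chars
    becomes its own (oversized) chunk.
    """
    chunks: list[str] = []
    current = ""
    for sentence in _SENTENCE_END.split(text):
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def prepare_for_speech(text: str, max_chars: int = 200) -> list[str]:
    """Clean, normalise numbers, then chunk into separate TTS requests."""
    return chunk_for_tts(format_numbers(clean_text(text)), max_chars)
```

For example, `prepare_for_speech("**Your pot** is $1,250. It grows 4.5% a year.", max_chars=30)` returns `["Your pot is 1,250 dollars.", "It grows 4.5 percent a year."]`. Chunking on sentence boundaries is one plausible choice because it keeps each request's intonation coherent; a real pipeline would need far more rules (dates, ranges, other currencies).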

Full data transparency and control

The 'Information Vault' shows users the data Finley has gathered and lets them edit it or add to it. It supports trust and informed client engagement, while also enabling human advisors to assess whether advice is grounded in appropriate and current information. It combines 'hard facts' that Finley has explicitly asked for with softer details: extra contextual information gathered during the conversation.
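One way to picture the Vault's split between hard facts and softer details is as a small data model. The sketch below is an assumption for illustration only: the class names, the `kind`/`source` labels and the `stale_keys` age check (standing in for "current information") are hypothetical, not Finley's schema.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Literal

# Hypothetical data model for the Information Vault; field names are assumptions.
Kind = Literal["hard_fact", "soft_detail"]
Source = Literal["asked", "inferred", "client_edit"]


@dataclass
class VaultEntry:
    key: str
    value: str
    kind: Kind        # "hard_fact": explicitly asked; "soft_detail": contextual
    source: Source    # how the value arrived in the vault
    updated: date


@dataclass
class InformationVault:
    entries: Dict[str, VaultEntry] = field(default_factory=dict)

    def record(self, key: str, value: str, kind: Kind, source: Source, on: date) -> None:
        """Add or overwrite an entry (from Finley, or from the client adding data)."""
        self.entries[key] = VaultEntry(key, value, kind, source, on)

    def client_edit(self, key: str, value: str, on: date) -> None:
        """Client correction: replace the value, keep the kind, mark the source."""
        old = self.entries[key]
        self.entries[key] = VaultEntry(key, value, old.kind, "client_edit", on)

    def hard_facts(self) -> List[VaultEntry]:
        return [e for e in self.entries.values() if e.kind == "hard_fact"]

    def stale_keys(self, today: date, max_age_days: int) -> List[str]:
        """Entries older than max_age_days, which an advisor may want re-confirmed."""
        return [k for k, e in self.entries.items() if (today - e.updated).days > max_age_days]
```

Keeping the source of every value (asked, inferred or edited by the client) is what would let an advisor see which parts of the advice rest on confirmed facts and which on softer context.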

To ensure LLMs manage complex conversation:

Topics are orchestrated behind-the-scenes

We used an agentic orchestration approach. Agents take responsibility for particular conversations or topics, handing off context as needed. Step-based prompts defined when the conversation should advance or pause, preventing premature conclusions and maintaining conversational coherence. For more detail, check out the whitepaper.
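The advance-or-pause logic can be sketched in Python as below. This is a simplified assumption, not Finley's framework: each hypothetical `TopicAgent` owns ordered `Step`s, a step counts as complete once its required facts are in a shared context, and the "prompt" is reduced to a label naming the active topic and step. The `"ready_for_advisor_review"` label is likewise illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set

# Hypothetical sketch: topic agents, steps and required facts are illustrative
# assumptions, not Finley's real prompts or framework.


@dataclass
class Step:
    name: str
    required: Set[str]  # facts that must be known before this step is complete


@dataclass
class TopicAgent:
    topic: str
    steps: List[Step]
    index: int = 0

    def current_step(self) -> Optional[Step]:
        return self.steps[self.index] if self.index < len(self.steps) else None

    def advance(self, context: Dict[str, str]) -> bool:
        """Pass every step whose required facts are known; pause at the first gap.

        Returns True once all of this agent's steps are complete.
        """
        while (step := self.current_step()) is not None and step.required.issubset(context):
            self.index += 1
        return self.current_step() is None


@dataclass
class Orchestrator:
    agents: List[TopicAgent]
    context: Dict[str, str] = field(default_factory=dict)  # shared across hand-offs
    active: int = 0

    def on_turn(self, extracted: Dict[str, str]) -> str:
        """Merge facts extracted from the latest user turn, then advance or hand off."""
        self.context.update(extracted)
        while self.active < len(self.agents) and self.agents[self.active].advance(self.context):
            self.active += 1  # hand-off: the next agent reads the same shared context
        if self.active == len(self.agents):
            return "ready_for_advisor_review"
        agent = self.agents[self.active]
        step = agent.current_step()
        return f"{agent.topic}:{step.name}"
```

With a "discovery" agent (steps `goals`, `income`) followed by a "retirement" agent (step `target_age`), a turn that only supplies a goal keeps the conversation paused at `discovery:income`; it cannot jump ahead to retirement planning until income is known.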

To deliver on regulation and compliance:

A licensed advisor in-the-loop

Finley operates within a supervised advisory model, in which a licensed human advisor signs off before any regulated action is taken and remains accountable for outcomes. Oversight was designed directly into the flow: structured review artefacts are generated whenever a regulated decision is reached, before any action is taken. This allows advisors to review, approve, and remain responsible without needing to be present in the live conversation.
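The sign-off rule amounts to a gate in code: no regulated action runs until a licensed advisor has approved the review artefact. The Python sketch below illustrates that rule under stated assumptions; the artefact fields, the `review` and `execute_regulated_action` functions and the `SignOffRequired` exception are hypothetical names, not Finley's implementation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Optional, Set, TypeVar

T = TypeVar("T")

# Hypothetical sign-off gate; artefact fields and function names are assumptions.


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


class SignOffRequired(Exception):
    """Raised when a regulated action is attempted without advisor approval."""


@dataclass
class ReviewArtefact:
    """Structured summary generated when a regulated decision is reached."""
    client_id: str
    recommendation: str
    rationale: str
    facts_relied_on: List[str] = field(default_factory=list)
    status: Status = Status.PENDING
    reviewed_by: Optional[str] = None


def review(artefact: ReviewArtefact, advisor_id: str, licensed: Set[str], approve: bool) -> None:
    """Record a licensed advisor's decision; anyone else is refused."""
    if advisor_id not in licensed:
        raise PermissionError(f"{advisor_id} is not a licensed advisor")
    artefact.status = Status.APPROVED if approve else Status.REJECTED
    artefact.reviewed_by = advisor_id


def execute_regulated_action(artefact: ReviewArtefact, action: Callable[[], T]) -> T:
    """Run the action only after the artefact has been approved."""
    if artefact.status is not Status.APPROVED:
        raise SignOffRequired(f"{artefact.recommendation!r} is {artefact.status.value}")
    return action()
```

Because the review happens on the artefact rather than in the conversation, the advisor can approve or reject asynchronously, and the artefact records who signed off and which facts the recommendation relied on.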

A four-level AI compliance architecture

As a team we developed a compliance framework anchored in a 4-Level AI Architecture that ensures every conversation is suitable, auditable, and client-first.

Plus: supporting clients throughout their financial lives

Financial advice unfolds over time. Finley supports this through a persistent app experience that brings a client’s financial life into a single, visible, discussable system. Goals, conversation tracks, open banking, and news alerts help clients re-engage as trust builds, circumstances change and decisions evolve.

IN SUMMARY